2016-05-06 11:23:48 +02:00

#!/usr/bin/env python3

# Copyright (c) 2014-2016 The Bitcoin Core developers

2021-04-20 21:33:02 +02:00

# Copyright (c) 2014-2021 The Dash Core developers

2014-10-23 03:48:19 +02:00

# Distributed under the MIT software license, see the accompanying

2014-07-08 18:07:23 +02:00

# file COPYING or http://www.opensource.org/licenses/mit-license.php.

2019-01-07 10:55:35 +01:00

""" Base class for RPC testing. """

2017-11-29 19:21:51 +01:00

2018-04-25 15:54:36 +02:00

import configparser

2020-01-04 12:22:41 +01:00

import copy

2017-06-02 11:32:55 +02:00

from enum import Enum

2016-05-10 18:27:31 +02:00

import logging

2021-07-04 00:41:23 +02:00

import argparse

2014-07-08 18:07:23 +02:00

import os

2017-08-11 18:09:51 +02:00

import pdb

2019-05-14 15:00:55 +02:00

import random

2014-07-08 18:07:23 +02:00

import shutil

2017-05-07 15:13:29 +02:00

import sys

2014-07-08 18:07:23 +02:00

import tempfile

2017-03-22 13:03:26 +01:00

import time

2019-01-23 17:36:51 +01:00

from concurrent . futures import ThreadPoolExecutor

2014-07-08 18:07:23 +02:00

2017-06-29 17:37:19 +02:00

from . authproxy import JSONRPCException

from . import coverage

2020-12-09 20:52:11 +01:00

from . messages import (

CTransaction ,

FromHex ,

hash256 ,

msg_islock ,

ser_compact_size ,

ser_string ,

)

2017-08-15 23:34:07 +02:00

from . test_node import TestNode

2018-06-29 18:04:25 +02:00

from . mininode import NetworkThread

2015-10-11 07:41:19 +02:00

from . util import (

2017-05-07 15:13:29 +02:00

PortSeed ,

MAX_NODES ,

2019-06-20 18:37:09 +02:00

assert_equal ,

2017-05-07 15:13:29 +02:00

check_json_precision ,

2015-10-11 07:41:19 +02:00

connect_nodes_bi ,

2018-10-20 16:08:02 +02:00

connect_nodes ,

2019-06-20 18:37:09 +02:00

copy_datadir ,

2017-05-02 20:02:55 +02:00

disconnect_nodes ,

2019-10-09 18:48:12 +02:00

force_finish_mnsync ,

2018-03-14 14:18:44 +01:00

get_datadir_path ,

2020-12-09 20:52:11 +01:00

hex_str_to_bytes ,

2017-05-07 15:13:29 +02:00

initialize_datadir ,

p2p_port ,

set_node_times ,

2020-07-12 21:32:38 +02:00

set_timeout_scale ,

2019-06-20 18:37:09 +02:00

satoshi_round ,

2015-10-11 07:41:19 +02:00

sync_blocks ,

sync_mempools ,

2019-10-01 16:14:26 +02:00

wait_until ,

2021-01-22 15:58:07 +01:00

get_chain_folder ,

2019-10-01 16:14:26 +02:00

)

2014-07-08 18:07:23 +02:00

2017-06-02 11:32:55 +02:00

class TestStatus ( Enum ) :

PASSED = 1

FAILED = 2

SKIPPED = 3

TEST_EXIT_PASSED = 0

TEST_EXIT_FAILED = 1

TEST_EXIT_SKIPPED = 77

2019-08-09 01:14:11 +02:00

GENESISTIME = 1417713337

2018-09-13 12:33:15 +02:00

class SkipTest ( Exception ) :

""" This exception is raised to skip a test """

def __init__ ( self , message ) :

self . message = message

2021-04-08 22:52:05 +02:00

class BitcoinTestMetaClass ( type ) :

""" Metaclass for BitcoinTestFramework.

Ensures that any attempt to register a subclass of ` BitcoinTestFramework `

adheres to a standard whereby the subclass overrides ` set_test_params ` and

` run_test ` but DOES NOT override either ` __init__ ` or ` main ` . If any of

those standards are violated , a ` ` TypeError ` ` is raised . """

def __new__ ( cls , clsname , bases , dct ) :

if not clsname == ' BitcoinTestFramework ' :

if not ( ' run_test ' in dct and ' set_test_params ' in dct ) :

raise TypeError ( " BitcoinTestFramework subclasses must override "

" ' run_test ' and ' set_test_params ' " )

if ' __init__ ' in dct or ' main ' in dct :

raise TypeError ( " BitcoinTestFramework subclasses may not override "

" ' __init__ ' or ' main ' " )

return super ( ) . __new__ ( cls , clsname , bases , dct )

class BitcoinTestFramework ( metaclass = BitcoinTestMetaClass ) :

2017-05-07 15:13:29 +02:00

""" Base class for a bitcoin test script.

2017-09-01 18:47:13 +02:00

Individual bitcoin test scripts should subclass this class and override the set_test_params ( ) and run_test ( ) methods .

Individual tests can also override the following methods to customize the test setup :

2017-05-07 15:13:29 +02:00

- add_options ( )

- setup_chain ( )

- setup_network ( )

2017-09-01 18:47:13 +02:00

- setup_nodes ( )

2017-05-07 15:13:29 +02:00

2017-09-01 18:47:13 +02:00

The __init__ ( ) and main ( ) methods should not be overridden .

2017-05-07 15:13:29 +02:00

This class also contains various public and private helper methods . """

2016-05-20 15:16:51 +02:00

def __init__ ( self ) :

2017-09-01 18:47:13 +02:00

""" Sets test framework defaults. Do not override this method. Instead, override the set_test_params() method """

2021-01-22 15:58:07 +01:00

self . chain = ' regtest '

2016-05-20 15:16:51 +02:00

self . setup_clean_chain = False

2017-06-29 17:37:19 +02:00

self . nodes = [ ]

2018-06-29 18:04:25 +02:00

self . network_thread = None

2017-06-29 17:37:19 +02:00

self . mocktime = 0

2018-12-29 20:18:43 +01:00

self . rpc_timeout = 60 # Wait for up to 60 seconds for the RPC server to respond

2018-01-12 23:24:36 +01:00

self . supports_cli = False

2018-03-07 14:51:58 +01:00

self . bind_to_localhost_only = True

2020-04-16 11:23:49 +02:00

self . extra_args_from_options = [ ]

2017-09-01 18:47:13 +02:00

self . set_test_params ( )

2016-05-20 15:16:51 +02:00

2017-09-01 18:47:13 +02:00

assert hasattr ( self , " num_nodes " ) , " Test must set self.num_nodes in set_test_params() "

2014-07-08 18:07:23 +02:00

def main ( self ) :

2017-09-01 18:47:13 +02:00

""" Main function. This should not be overridden by the subclass test scripts. """

2014-07-08 18:07:23 +02:00

2021-07-04 00:41:23 +02:00

parser = argparse . ArgumentParser ( usage = " %(prog)s [options] " )

parser . add_argument ( " --nocleanup " , dest = " nocleanup " , default = False , action = " store_true " ,

help = " Leave dashds and test.* datadir on exit or error " )

parser . add_argument ( " --noshutdown " , dest = " noshutdown " , default = False , action = " store_true " ,

help = " Don ' t stop dashds after the test execution " )

parser . add_argument ( " --cachedir " , dest = " cachedir " , default = os . path . abspath ( os . path . dirname ( os . path . realpath ( __file__ ) ) + " /../../cache " ) ,

help = " Directory for caching pregenerated datadirs (default: %(default)s ) " )

parser . add_argument ( " --tmpdir " , dest = " tmpdir " , help = " Root directory for datadirs " )

parser . add_argument ( " -l " , " --loglevel " , dest = " loglevel " , default = " INFO " ,

help = " log events at this level and higher to the console. Can be set to DEBUG, INFO, WARNING, ERROR or CRITICAL. Passing --loglevel DEBUG will output all logs to console. Note that logs at all levels are always written to the test_framework.log file in the temporary test directory. " )

parser . add_argument ( " --tracerpc " , dest = " trace_rpc " , default = False , action = " store_true " ,

help = " Print out all RPC calls as they are made " )

parser . add_argument ( " --portseed " , dest = " port_seed " , default = os . getpid ( ) , type = int ,

help = " The seed to use for assigning port numbers (default: current process id) " )

parser . add_argument ( " --coveragedir " , dest = " coveragedir " ,

help = " Write tested RPC commands into this directory " )

parser . add_argument ( " --configfile " , dest = " configfile " ,

default = os . path . abspath ( os . path . dirname ( os . path . realpath ( __file__ ) ) + " /../../config.ini " ) ,

help = " Location of the test framework config file (default: %(default)s ) " )

parser . add_argument ( " --pdbonfailure " , dest = " pdbonfailure " , default = False , action = " store_true " ,

help = " Attach a python debugger if test fails " )

parser . add_argument ( " --usecli " , dest = " usecli " , default = False , action = " store_true " ,

help = " use dash-cli instead of RPC for all commands " )

parser . add_argument ( " --dashd-arg " , dest = " dashd_extra_args " , default = [ ] , action = " append " ,

help = " Pass extra args to all dashd instances " )

parser . add_argument ( " --timeoutscale " , dest = " timeout_scale " , default = 1 , type = int ,

help = " Scale the test timeouts by multiplying them with the here provided value (default: %(default)s ) " )

Merge #14519: tests: add utility to easily profile node performance with perf

13782b8ba8 docs: add perf section to developer docs (James O'Beirne)

58180b5fd4 tests: add utility to easily profile node performance with perf (James O'Beirne)

Pull request description:

Adds a context manager to easily (and selectively) profile node performance during functional test execution using `perf`.

While writing some tests, I encountered some odd bitcoind slowness. I wrote up a utility (`TestNode.profile_with_perf`) that generates performance diagnostics for a node by running `perf` during the execution of a particular region of test code.

`perf` usage is detailed in the excellent (and sadly unmerged) https://github.com/bitcoin/bitcoin/pull/12649; all due props to @eklitzke.

### Example

```python

with node.profile_with_perf("large-msgs"):

for i in range(200):

node.p2p.send_message(some_large_msg)

node.p2p.sync_with_ping()

```

This generates a perf data file in the test node's datadir (`/tmp/testtxmpod0y/node0/node-0-TestName-large-msgs.perf.data`).

Running `perf report` generates nice output about where the node spent most of its time while running that part of the test:

```bash

$ perf report -i /tmp/testtxmpod0y/node0/node-0-TestName-large-msgs.perf.data --stdio \

| c++filt \

| less

# To display the perf.data header info, please use --header/--header-only options.

#

#

# Total Lost Samples: 0

#

# Samples: 135 of event 'cycles:pp'

# Event count (approx.): 1458205679493582

#

# Children Self Command Shared Object Symbol

# ........ ........ ............... ................... ........................................................................................................................................................................................................................................................................

#

70.14% 0.00% bitcoin-net bitcoind [.] CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

|

---CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

70.14% 0.00% bitcoin-net bitcoind [.] CNetMessage::readData(char const*, unsigned int)

|

---CNetMessage::readData(char const*, unsigned int)

CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

35.52% 0.00% bitcoin-net bitcoind [.] std::vector<char, zero_after_free_allocator<char> >::_M_fill_insert(__gnu_cxx::__normal_iterator<char*, std::vector<char, zero_after_free_allocator<char> > >, unsigned long, char const&)

|

---std::vector<char, zero_after_free_allocator<char> >::_M_fill_insert(__gnu_cxx::__normal_iterator<char*, std::vector<char, zero_after_free_allocator<char> > >, unsigned long, char const&)

CNetMessage::readData(char const*, unsigned int)

CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

...

```

Tree-SHA512: 9ac4ceaa88818d5eca00994e8e3c8ad42ae019550d6583972a0a4f7b0c4f61032e3d0c476b4ae58756bc5eb8f8015a19a7fc26c095bd588f31d49a37ed0c6b3e

2019-02-05 23:40:11 +01:00

parser . add_argument ( " --perf " , dest = " perf " , default = False , action = " store_true " ,

help = " profile running nodes with perf for the duration of the test " )

2019-05-14 15:00:55 +02:00

parser . add_argument ( " --randomseed " , type = int ,

help = " set a random seed for deterministically reproducing a previous test run " )

2014-07-08 18:07:23 +02:00

self . add_options ( parser )

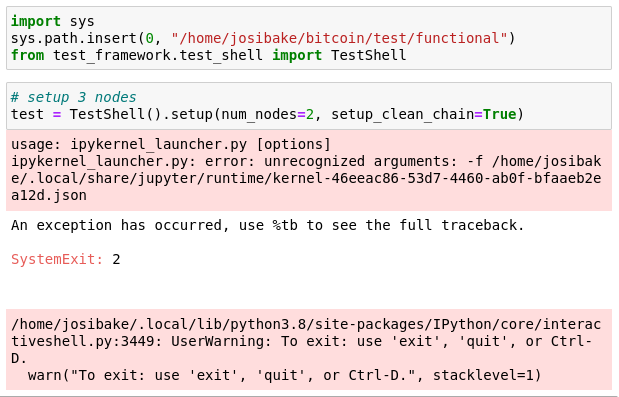

Merge bitcoin/bitcoin#22201: test: Fix TestShell to allow running in Jupyter Notebook

168b6c317ca054c1287c36be532964e861f44266 add dummy file param to fix jupyter (Josiah Baker)

Pull request description:

this fixes argparse to use `parse_known_args`. previously, if an unknown argument was passed, argparse would fail with an `unrecognized arguments: %s` error.

## why

the documentation mentions being able to run `TestShell` in a REPL interpreter or a jupyter notebook. when i tried to run inside a jupyter notebook, i got the following error:

this was due to the notebook passing the filename of the notebook as an argument. this is a known problem with notebooks and argparse, documented here: https://stackoverflow.com/questions/48796169/how-to-fix-ipykernel-launcher-py-error-unrecognized-arguments-in-jupyter

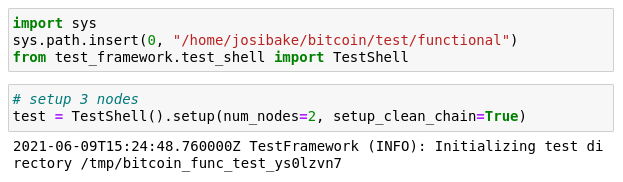

## testing

to test, make sure you have jupyter notebooks installed. you can do this by running:

```

pip install notebook

```

or following instructions from [here](https://jupyterlab.readthedocs.io/en/stable/getting_started/installation.html).

once installed, start a notebook (`jupyter notebook`), launch a python3 kernel and run the following snippet:

```python

import sys

# make sure this is the path for your system

sys.path.insert(0, "/path/to/bitcoin/test/functional")

from test_framework.test_shell import TestShell

test = TestShell().setup(num_nodes=2, setup_clean_chain=True)

```

you should see the following output, without errors:

if you are unfamiliar with notebooks, here is a short guide on using them: https://jupyter.readthedocs.io/en/latest/running.html

ACKs for top commit:

MarcoFalke:

review ACK 168b6c317ca054c1287c36be532964e861f44266

jamesob:

crACK https://github.com/bitcoin/bitcoin/pull/22201/commits/168b6c317ca054c1287c36be532964e861f44266

practicalswift:

cr ACK 168b6c317ca054c1287c36be532964e861f44266

Tree-SHA512: 4fee1563bf64a1cf9009934182412446cde03badf2f19553b78ad2cb3ceb0e5e085a5db41ed440473494ac047f04641311ecbba3948761c6553d0ca4b54937b4

2021-06-22 08:11:14 +02:00

# Running TestShell in a Jupyter notebook causes an additional -f argument

# To keep TestShell from failing with an "unrecognized argument" error, we add a dummy "-f" argument

# source: https://stackoverflow.com/questions/48796169/how-to-fix-ipykernel-launcher-py-error-unrecognized-arguments-in-jupyter/56349168#56349168

parser . add_argument ( " -f " , " --fff " , help = " a dummy argument to fool ipython " , default = " 1 " )

2021-07-04 00:41:23 +02:00

self . options = parser . parse_args ( )

2014-07-08 18:07:23 +02:00

2020-07-12 21:32:38 +02:00

if self . options . timeout_scale < 1 :

raise RuntimeError ( " --timeoutscale can ' t be less than 1 " )

set_timeout_scale ( self . options . timeout_scale )

2016-05-10 18:27:31 +02:00

PortSeed . n = self . options . port_seed

2014-07-08 18:07:23 +02:00

check_json_precision ( )

2017-10-18 16:52:44 +02:00

self . options . cachedir = os . path . abspath ( self . options . cachedir )

2018-04-25 15:54:36 +02:00

config = configparser . ConfigParser ( )

config . read_file ( open ( self . options . configfile ) )

2020-07-21 23:15:35 +02:00

self . options . bitcoind = os . getenv ( " BITCOIND " , default = config [ " environment " ] [ " BUILDDIR " ] + ' /src/dashd ' + config [ " environment " ] [ " EXEEXT " ] )

self . options . bitcoincli = os . getenv ( " BITCOINCLI " , default = config [ " environment " ] [ " BUILDDIR " ] + ' /src/dash-cli ' + config [ " environment " ] [ " EXEEXT " ] )

2018-04-25 15:54:36 +02:00

2020-04-16 11:23:49 +02:00

self . extra_args_from_options = self . options . dashd_extra_args

2018-06-01 10:56:53 +02:00

os . environ [ ' PATH ' ] = os . pathsep . join ( [

os . path . join ( config [ ' environment ' ] [ ' BUILDDIR ' ] , ' src ' ) ,

os . path . join ( config [ ' environment ' ] [ ' BUILDDIR ' ] , ' src ' , ' qt ' ) ,

os . environ [ ' PATH ' ]

] )

2018-05-09 10:41:40 +02:00

2017-03-09 21:16:20 +01:00

# Set up temp directory and start logging

2017-05-22 08:59:11 +02:00

if self . options . tmpdir :

2017-10-18 16:52:44 +02:00

self . options . tmpdir = os . path . abspath ( self . options . tmpdir )

2017-05-22 08:59:11 +02:00

os . makedirs ( self . options . tmpdir , exist_ok = False )

else :

self . options . tmpdir = tempfile . mkdtemp ( prefix = " test " )

2017-03-09 21:16:20 +01:00

self . _start_logging ( )

2019-05-14 15:00:55 +02:00

# Seed the PRNG. Note that test runs are reproducible if and only if

# a single thread accesses the PRNG. For more information, see

# https://docs.python.org/3/library/random.html#notes-on-reproducibility.

# The network thread shouldn't access random. If we need to change the

# network thread to access randomness, it should instantiate its own

# random.Random object.

seed = self . options . randomseed

if seed is None :

seed = random . randrange ( sys . maxsize )

else :

self . log . debug ( " User supplied random seed {} " . format ( seed ) )

random . seed ( seed )

self . log . debug ( " PRNG seed is: {} " . format ( seed ) )

2018-06-29 18:04:25 +02:00

self . log . debug ( ' Setting up network thread ' )

self . network_thread = NetworkThread ( )

self . network_thread . start ( )

2017-06-02 11:32:55 +02:00

success = TestStatus . FAILED

2017-03-09 21:16:20 +01:00

2014-07-08 18:07:23 +02:00

try :

2018-10-04 03:31:29 +02:00

if self . options . usecli :

if not self . supports_cli :

raise SkipTest ( " --usecli specified but test does not support using CLI " )

self . skip_if_no_cli ( )

2018-09-13 12:33:15 +02:00

self . skip_test_if_missing_module ( )

2014-10-20 14:14:04 +02:00

self . setup_chain ( )

self . setup_network ( )

2018-09-10 22:58:15 +02:00

self . import_deterministic_coinbase_privkeys ( )

2014-10-20 14:14:04 +02:00

self . run_test ( )

2017-06-02 11:32:55 +02:00

success = TestStatus . PASSED

2020-09-27 07:43:00 +02:00

except JSONRPCException :

2017-03-09 21:16:20 +01:00

self . log . exception ( " JSONRPC error " )

2017-06-02 11:32:55 +02:00

except SkipTest as e :

self . log . warning ( " Test Skipped: %s " % e . message )

success = TestStatus . SKIPPED

2020-09-27 07:43:00 +02:00

except AssertionError :

2017-03-09 21:16:20 +01:00

self . log . exception ( " Assertion failed " )

2020-09-27 07:43:00 +02:00

except KeyError :

2017-03-09 21:16:20 +01:00

self . log . exception ( " Key error " )

2020-09-27 07:43:00 +02:00

except Exception :

2017-03-09 21:16:20 +01:00

self . log . exception ( " Unexpected exception caught during testing " )

2020-09-27 07:43:00 +02:00

except KeyboardInterrupt :

2017-03-09 21:16:20 +01:00

self . log . warning ( " Exiting after keyboard interrupt " )

2014-07-08 18:07:23 +02:00

2017-08-11 18:09:51 +02:00

if success == TestStatus . FAILED and self . options . pdbonfailure :

print ( " Testcase failed. Attaching python debugger. Enter ? for help " )

pdb . set_trace ( )

2018-06-29 18:04:25 +02:00

self . log . debug ( ' Closing down network thread ' )

self . network_thread . close ( )

2015-04-23 14:19:00 +02:00

if not self . options . noshutdown :

2017-03-09 21:16:20 +01:00

self . log . info ( " Stopping nodes " )

2019-02-21 19:37:16 +01:00

try :

2017-06-02 11:32:55 +02:00

if self . nodes :

self . stop_nodes ( )

2020-09-27 07:43:00 +02:00

except BaseException :

2019-02-21 19:37:16 +01:00

success = False

2019-03-08 09:05:00 +01:00

self . log . exception ( " Unexpected exception caught during shutdown " )

2015-04-23 14:19:00 +02:00

else :

2018-04-08 17:05:44 +02:00

for node in self . nodes :

node . cleanup_on_exit = False

2017-03-09 21:16:20 +01:00

self . log . info ( " Note: dashds were not stopped and may still be running " )

2015-04-20 11:50:33 +02:00

Merge #14519: tests: add utility to easily profile node performance with perf

13782b8ba8 docs: add perf section to developer docs (James O'Beirne)

58180b5fd4 tests: add utility to easily profile node performance with perf (James O'Beirne)

Pull request description:

Adds a context manager to easily (and selectively) profile node performance during functional test execution using `perf`.

While writing some tests, I encountered some odd bitcoind slowness. I wrote up a utility (`TestNode.profile_with_perf`) that generates performance diagnostics for a node by running `perf` during the execution of a particular region of test code.

`perf` usage is detailed in the excellent (and sadly unmerged) https://github.com/bitcoin/bitcoin/pull/12649; all due props to @eklitzke.

### Example

```python

with node.profile_with_perf("large-msgs"):

for i in range(200):

node.p2p.send_message(some_large_msg)

node.p2p.sync_with_ping()

```

This generates a perf data file in the test node's datadir (`/tmp/testtxmpod0y/node0/node-0-TestName-large-msgs.perf.data`).

Running `perf report` generates nice output about where the node spent most of its time while running that part of the test:

```bash

$ perf report -i /tmp/testtxmpod0y/node0/node-0-TestName-large-msgs.perf.data --stdio \

| c++filt \

| less

# To display the perf.data header info, please use --header/--header-only options.

#

#

# Total Lost Samples: 0

#

# Samples: 135 of event 'cycles:pp'

# Event count (approx.): 1458205679493582

#

# Children Self Command Shared Object Symbol

# ........ ........ ............... ................... ........................................................................................................................................................................................................................................................................

#

70.14% 0.00% bitcoin-net bitcoind [.] CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

|

---CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

70.14% 0.00% bitcoin-net bitcoind [.] CNetMessage::readData(char const*, unsigned int)

|

---CNetMessage::readData(char const*, unsigned int)

CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

35.52% 0.00% bitcoin-net bitcoind [.] std::vector<char, zero_after_free_allocator<char> >::_M_fill_insert(__gnu_cxx::__normal_iterator<char*, std::vector<char, zero_after_free_allocator<char> > >, unsigned long, char const&)

|

---std::vector<char, zero_after_free_allocator<char> >::_M_fill_insert(__gnu_cxx::__normal_iterator<char*, std::vector<char, zero_after_free_allocator<char> > >, unsigned long, char const&)

CNetMessage::readData(char const*, unsigned int)

CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

...

```

Tree-SHA512: 9ac4ceaa88818d5eca00994e8e3c8ad42ae019550d6583972a0a4f7b0c4f61032e3d0c476b4ae58756bc5eb8f8015a19a7fc26c095bd588f31d49a37ed0c6b3e

2019-02-05 23:40:11 +01:00

should_clean_up = (

not self . options . nocleanup and

not self . options . noshutdown and

success != TestStatus . FAILED and

not self . options . perf

)

if should_clean_up :

2018-02-12 11:30:17 +01:00

self . log . info ( " Cleaning up {} on exit " . format ( self . options . tmpdir ) )

cleanup_tree_on_exit = True

Merge #14519: tests: add utility to easily profile node performance with perf

13782b8ba8 docs: add perf section to developer docs (James O'Beirne)

58180b5fd4 tests: add utility to easily profile node performance with perf (James O'Beirne)

Pull request description:

Adds a context manager to easily (and selectively) profile node performance during functional test execution using `perf`.

While writing some tests, I encountered some odd bitcoind slowness. I wrote up a utility (`TestNode.profile_with_perf`) that generates performance diagnostics for a node by running `perf` during the execution of a particular region of test code.

`perf` usage is detailed in the excellent (and sadly unmerged) https://github.com/bitcoin/bitcoin/pull/12649; all due props to @eklitzke.

### Example

```python

with node.profile_with_perf("large-msgs"):

for i in range(200):

node.p2p.send_message(some_large_msg)

node.p2p.sync_with_ping()

```

This generates a perf data file in the test node's datadir (`/tmp/testtxmpod0y/node0/node-0-TestName-large-msgs.perf.data`).

Running `perf report` generates nice output about where the node spent most of its time while running that part of the test:

```bash

$ perf report -i /tmp/testtxmpod0y/node0/node-0-TestName-large-msgs.perf.data --stdio \

| c++filt \

| less

# To display the perf.data header info, please use --header/--header-only options.

#

#

# Total Lost Samples: 0

#

# Samples: 135 of event 'cycles:pp'

# Event count (approx.): 1458205679493582

#

# Children Self Command Shared Object Symbol

# ........ ........ ............... ................... ........................................................................................................................................................................................................................................................................

#

70.14% 0.00% bitcoin-net bitcoind [.] CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

|

---CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

70.14% 0.00% bitcoin-net bitcoind [.] CNetMessage::readData(char const*, unsigned int)

|

---CNetMessage::readData(char const*, unsigned int)

CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

35.52% 0.00% bitcoin-net bitcoind [.] std::vector<char, zero_after_free_allocator<char> >::_M_fill_insert(__gnu_cxx::__normal_iterator<char*, std::vector<char, zero_after_free_allocator<char> > >, unsigned long, char const&)

|

---std::vector<char, zero_after_free_allocator<char> >::_M_fill_insert(__gnu_cxx::__normal_iterator<char*, std::vector<char, zero_after_free_allocator<char> > >, unsigned long, char const&)

CNetMessage::readData(char const*, unsigned int)

CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

...

```

Tree-SHA512: 9ac4ceaa88818d5eca00994e8e3c8ad42ae019550d6583972a0a4f7b0c4f61032e3d0c476b4ae58756bc5eb8f8015a19a7fc26c095bd588f31d49a37ed0c6b3e

2019-02-05 23:40:11 +01:00

elif self . options . perf :

self . log . warning ( " Not cleaning up dir {} due to perf data " . format ( self . options . tmpdir ) )

cleanup_tree_on_exit = False

2016-05-25 11:52:25 +02:00

else :

Merge #14519: tests: add utility to easily profile node performance with perf

13782b8ba8 docs: add perf section to developer docs (James O'Beirne)

58180b5fd4 tests: add utility to easily profile node performance with perf (James O'Beirne)

Pull request description:

Adds a context manager to easily (and selectively) profile node performance during functional test execution using `perf`.

While writing some tests, I encountered some odd bitcoind slowness. I wrote up a utility (`TestNode.profile_with_perf`) that generates performance diagnostics for a node by running `perf` during the execution of a particular region of test code.

`perf` usage is detailed in the excellent (and sadly unmerged) https://github.com/bitcoin/bitcoin/pull/12649; all due props to @eklitzke.

### Example

```python

with node.profile_with_perf("large-msgs"):

for i in range(200):

node.p2p.send_message(some_large_msg)

node.p2p.sync_with_ping()

```

This generates a perf data file in the test node's datadir (`/tmp/testtxmpod0y/node0/node-0-TestName-large-msgs.perf.data`).

Running `perf report` generates nice output about where the node spent most of its time while running that part of the test:

```bash

$ perf report -i /tmp/testtxmpod0y/node0/node-0-TestName-large-msgs.perf.data --stdio \

| c++filt \

| less

# To display the perf.data header info, please use --header/--header-only options.

#

#

# Total Lost Samples: 0

#

# Samples: 135 of event 'cycles:pp'

# Event count (approx.): 1458205679493582

#

# Children Self Command Shared Object Symbol

# ........ ........ ............... ................... ........................................................................................................................................................................................................................................................................

#

70.14% 0.00% bitcoin-net bitcoind [.] CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

|

---CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

70.14% 0.00% bitcoin-net bitcoind [.] CNetMessage::readData(char const*, unsigned int)

|

---CNetMessage::readData(char const*, unsigned int)

CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

35.52% 0.00% bitcoin-net bitcoind [.] std::vector<char, zero_after_free_allocator<char> >::_M_fill_insert(__gnu_cxx::__normal_iterator<char*, std::vector<char, zero_after_free_allocator<char> > >, unsigned long, char const&)

|

---std::vector<char, zero_after_free_allocator<char> >::_M_fill_insert(__gnu_cxx::__normal_iterator<char*, std::vector<char, zero_after_free_allocator<char> > >, unsigned long, char const&)

CNetMessage::readData(char const*, unsigned int)

CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

...

```

Tree-SHA512: 9ac4ceaa88818d5eca00994e8e3c8ad42ae019550d6583972a0a4f7b0c4f61032e3d0c476b4ae58756bc5eb8f8015a19a7fc26c095bd588f31d49a37ed0c6b3e

2019-02-05 23:40:11 +01:00

self . log . warning ( " Not cleaning up dir {} " . format ( self . options . tmpdir ) )

2018-02-12 11:30:17 +01:00

cleanup_tree_on_exit = False

2017-06-02 11:32:55 +02:00

if success == TestStatus . PASSED :

2017-03-09 21:16:20 +01:00

self . log . info ( " Tests successful " )

2017-11-29 19:21:51 +01:00

exit_code = TEST_EXIT_PASSED

2017-06-02 11:32:55 +02:00

elif success == TestStatus . SKIPPED :

self . log . info ( " Test skipped " )

2017-11-29 19:21:51 +01:00

exit_code = TEST_EXIT_SKIPPED

2014-07-08 18:07:23 +02:00

else :

2017-03-09 21:16:20 +01:00

self . log . error ( " Test failed. Test logging available at %s /test_framework.log " , self . options . tmpdir )

2017-11-29 19:21:51 +01:00

self . log . error ( " Hint: Call {} ' {} ' to consolidate all logs " . format ( os . path . normpath ( os . path . dirname ( os . path . realpath ( __file__ ) ) + " /../combine_logs.py " ) , self . options . tmpdir ) )

exit_code = TEST_EXIT_FAILED

logging . shutdown ( )

2018-02-12 11:30:17 +01:00

if cleanup_tree_on_exit :

shutil . rmtree ( self . options . tmpdir )

2017-11-29 19:21:51 +01:00

sys . exit ( exit_code )

2015-04-28 18:39:47 +02:00

2017-09-01 18:47:13 +02:00

# Methods to override in subclass test scripts.

def set_test_params ( self ) :

2020-12-27 10:42:48 +01:00

""" Tests must override this method to change default values for number of nodes, topology, etc """

2017-09-01 18:47:13 +02:00

raise NotImplementedError

2017-05-07 15:13:29 +02:00

2017-09-01 18:47:13 +02:00

def add_options ( self , parser ) :

""" Override this method to add command-line options to the test """

pass

2017-06-29 17:37:19 +02:00

2018-09-13 12:33:15 +02:00

def skip_test_if_missing_module ( self ) :

""" Override this method to skip a test if a module is not compiled """

pass

2017-09-01 18:47:13 +02:00

def setup_chain ( self ) :

""" Override this method to customize blockchain setup """

self . log . info ( " Initializing test directory " + self . options . tmpdir )

if self . setup_clean_chain :

self . _initialize_chain_clean ( )

self . set_genesis_mocktime ( )

else :

self . _initialize_chain ( )

self . set_cache_mocktime ( )

2017-06-29 17:37:19 +02:00

2017-09-01 18:47:13 +02:00

def setup_network ( self ) :

""" Override this method to customize test network topology """

self . setup_nodes ( )

# Connect the nodes as a "chain". This allows us

# to split the network between nodes 1 and 2 to get

# two halves that can work on competing chains.

for i in range ( self . num_nodes - 1 ) :

connect_nodes_bi ( self . nodes , i , i + 1 )

self . sync_all ( )

def setup_nodes ( self ) :

""" Override this method to customize test node setup """

extra_args = None

if hasattr ( self , " extra_args " ) :

extra_args = self . extra_args

2021-06-17 19:05:11 +02:00

self . add_nodes ( self . num_nodes , extra_args )

2017-09-01 18:47:13 +02:00

self . start_nodes ( )

2018-09-10 22:58:15 +02:00

def import_deterministic_coinbase_privkeys ( self ) :

if self . setup_clean_chain :

return

for n in self . nodes :

try :

n . getwalletinfo ( )

except JSONRPCException as e :

assert str ( e ) . startswith ( ' Method not found ' )

continue

2018-09-17 20:00:41 +02:00

n . importprivkey ( n . get_deterministic_priv_key ( ) . key )

2018-09-10 22:58:15 +02:00

2017-09-01 18:47:13 +02:00

def run_test ( self ) :

""" Tests must override this method to define test logic """

raise NotImplementedError

2017-06-29 17:37:19 +02:00

2017-09-01 18:47:13 +02:00

# Public helper methods. These can be accessed by the subclass test scripts.

2017-06-29 17:37:19 +02:00

2018-08-02 14:31:47 +02:00

def add_nodes ( self , num_nodes , extra_args = None , * , rpchost = None , binary = None ) :

2017-09-01 18:47:13 +02:00

""" Instantiate TestNode objects """

2018-03-07 14:51:58 +01:00

if self . bind_to_localhost_only :

extra_confs = [ [ " bind=127.0.0.1 " ] ] * num_nodes

else :

extra_confs = [ [ ] ] * num_nodes

2017-06-29 17:37:19 +02:00

if extra_args is None :

2017-08-15 23:34:07 +02:00

extra_args = [ [ ] ] * num_nodes

2017-06-29 17:37:19 +02:00

if binary is None :

2018-04-25 15:54:36 +02:00

binary = [ self . options . bitcoind ] * num_nodes

2018-03-07 14:51:58 +01:00

assert_equal ( len ( extra_confs ) , num_nodes )

2017-06-29 17:37:19 +02:00

assert_equal ( len ( extra_args ) , num_nodes )

assert_equal ( len ( binary ) , num_nodes )

2017-09-01 18:47:13 +02:00

for i in range ( num_nodes ) :

2018-12-13 14:58:57 +01:00

numnode = len ( self . nodes )

Merge #14519: tests: add utility to easily profile node performance with perf

13782b8ba8 docs: add perf section to developer docs (James O'Beirne)

58180b5fd4 tests: add utility to easily profile node performance with perf (James O'Beirne)

Pull request description:

Adds a context manager to easily (and selectively) profile node performance during functional test execution using `perf`.

While writing some tests, I encountered some odd bitcoind slowness. I wrote up a utility (`TestNode.profile_with_perf`) that generates performance diagnostics for a node by running `perf` during the execution of a particular region of test code.

`perf` usage is detailed in the excellent (and sadly unmerged) https://github.com/bitcoin/bitcoin/pull/12649; all due props to @eklitzke.

### Example

```python

with node.profile_with_perf("large-msgs"):

for i in range(200):

node.p2p.send_message(some_large_msg)

node.p2p.sync_with_ping()

```

This generates a perf data file in the test node's datadir (`/tmp/testtxmpod0y/node0/node-0-TestName-large-msgs.perf.data`).

Running `perf report` generates nice output about where the node spent most of its time while running that part of the test:

```bash

$ perf report -i /tmp/testtxmpod0y/node0/node-0-TestName-large-msgs.perf.data --stdio \

| c++filt \

| less

# To display the perf.data header info, please use --header/--header-only options.

#

#

# Total Lost Samples: 0

#

# Samples: 135 of event 'cycles:pp'

# Event count (approx.): 1458205679493582

#

# Children Self Command Shared Object Symbol

# ........ ........ ............... ................... ........................................................................................................................................................................................................................................................................

#

70.14% 0.00% bitcoin-net bitcoind [.] CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

|

---CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

70.14% 0.00% bitcoin-net bitcoind [.] CNetMessage::readData(char const*, unsigned int)

|

---CNetMessage::readData(char const*, unsigned int)

CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

35.52% 0.00% bitcoin-net bitcoind [.] std::vector<char, zero_after_free_allocator<char> >::_M_fill_insert(__gnu_cxx::__normal_iterator<char*, std::vector<char, zero_after_free_allocator<char> > >, unsigned long, char const&)

|

---std::vector<char, zero_after_free_allocator<char> >::_M_fill_insert(__gnu_cxx::__normal_iterator<char*, std::vector<char, zero_after_free_allocator<char> > >, unsigned long, char const&)

CNetMessage::readData(char const*, unsigned int)

CNode::ReceiveMsgBytes(char const*, unsigned int, bool&)

...

```

Tree-SHA512: 9ac4ceaa88818d5eca00994e8e3c8ad42ae019550d6583972a0a4f7b0c4f61032e3d0c476b4ae58756bc5eb8f8015a19a7fc26c095bd588f31d49a37ed0c6b3e

2019-02-05 23:40:11 +01:00

self . nodes . append ( TestNode ( numnode , get_datadir_path ( self . options . tmpdir , numnode ) , self . extra_args_from_options , chain = self . chain , rpchost = rpchost , timewait = self . rpc_timeout , bitcoind = binary [ i ] , bitcoin_cli = self . options . bitcoincli , mocktime = self . mocktime , coverage_dir = self . options . coveragedir , extra_conf = extra_confs [ i ] , extra_args = extra_args [ i ] , use_cli = self . options . usecli , start_perf = self . options . perf ) )

2017-09-01 18:47:13 +02:00

2018-01-19 17:44:27 +01:00

def start_node ( self , i , * args , * * kwargs ) :

2019-09-26 15:50:24 +02:00

""" Start a dashd """

2017-09-01 18:47:13 +02:00

node = self . nodes [ i ]

2018-01-19 17:44:27 +01:00

node . start ( * args , * * kwargs )

2017-09-01 18:47:13 +02:00

node . wait_for_rpc_connection ( )

if self . options . coveragedir is not None :

coverage . write_all_rpc_commands ( self . options . coveragedir , node . rpc )

2021-06-17 19:05:11 +02:00

def start_nodes ( self , extra_args = None , * args , * * kwargs ) :

2017-09-01 18:47:13 +02:00

""" Start multiple dashds """

if extra_args is None :

extra_args = [ None ] * self . num_nodes

assert_equal ( len ( extra_args ) , self . num_nodes )

2017-06-29 17:37:19 +02:00

try :

2017-09-01 18:47:13 +02:00

for i , node in enumerate ( self . nodes ) :

2021-06-17 19:05:11 +02:00

node . start ( extra_args [ i ] , * args , * * kwargs )

2017-09-01 18:47:13 +02:00

for node in self . nodes :

2017-08-15 23:34:07 +02:00

node . wait_for_rpc_connection ( )

2017-06-29 17:37:19 +02:00

except :

# If one node failed to start, stop the others

self . stop_nodes ( )

raise

2017-08-15 23:34:07 +02:00

if self . options . coveragedir is not None :

2017-09-01 18:47:13 +02:00

for node in self . nodes :

2017-08-15 23:34:07 +02:00

coverage . write_all_rpc_commands ( self . options . coveragedir , node . rpc )

2021-06-17 19:05:11 +02:00

def stop_node ( self , i , expected_stderr = ' ' , wait = 0 ) :

2019-08-09 01:21:57 +02:00

""" Stop a dashd test node """

2021-06-17 19:05:11 +02:00

self . nodes [ i ] . stop_node ( expected_stderr = expected_stderr , wait = wait )

2017-09-06 20:02:08 +02:00

self . nodes [ i ] . wait_until_stopped ( )

2019-08-09 01:19:22 +02:00

2021-06-17 19:05:11 +02:00

def stop_nodes ( self , expected_stderr = ' ' , wait = 0 ) :

2019-08-09 01:21:57 +02:00

""" Stop multiple dashd test nodes """

2017-08-15 23:34:07 +02:00

for node in self . nodes :

# Issue RPC to stop nodes

2021-06-17 19:05:11 +02:00

node . stop_node ( expected_stderr = expected_stderr , wait = wait )

2017-06-29 17:37:19 +02:00

2017-08-15 23:34:07 +02:00

for node in self . nodes :

# Wait for nodes to stop

2017-09-06 20:02:08 +02:00

node . wait_until_stopped ( )

2017-06-29 17:37:19 +02:00

2021-06-17 19:05:11 +02:00

def restart_node ( self , i , extra_args = None , expected_stderr = ' ' ) :

2017-09-25 13:16:08 +02:00

""" Stop and start a test node """

2021-06-17 19:05:11 +02:00

self . stop_node ( i , expected_stderr )

2017-09-25 13:16:08 +02:00

self . start_node ( i , extra_args )

2017-06-29 17:37:19 +02:00

def wait_for_node_exit ( self , i , timeout ) :

2017-08-15 23:34:07 +02:00

self . nodes [ i ] . process . wait ( timeout )

2017-05-07 15:13:29 +02:00

def split_network ( self ) :

"""

Split the network of four nodes into nodes 0 / 1 and 2 / 3.

"""

disconnect_nodes ( self . nodes [ 1 ] , 2 )

disconnect_nodes ( self . nodes [ 2 ] , 1 )

2020-04-14 12:00:16 +02:00

self . sync_all ( self . nodes [ : 2 ] )

self . sync_all ( self . nodes [ 2 : ] )

2017-05-07 15:13:29 +02:00

def join_network ( self ) :

"""

Join the ( previously split ) network halves together .

"""

connect_nodes_bi ( self . nodes , 1 , 2 )

self . sync_all ( )

2020-04-14 12:00:16 +02:00

def sync_blocks ( self , nodes = None , * * kwargs ) :

sync_blocks ( nodes or self . nodes , * * kwargs )

2017-05-07 15:13:29 +02:00

2020-04-14 12:00:16 +02:00

def sync_mempools ( self , nodes = None , * * kwargs ) :

if self . mocktime != 0 :

if ' wait ' not in kwargs :

kwargs [ ' wait ' ] = 0.1

if ' wait_func ' not in kwargs :

2020-04-12 07:44:31 +02:00

kwargs [ ' wait_func ' ] = lambda : self . bump_mocktime ( 3 , nodes = nodes )

2020-04-14 12:00:16 +02:00

sync_mempools ( nodes or self . nodes , * * kwargs )

def sync_all ( self , nodes = None , * * kwargs ) :

self . sync_blocks ( nodes , * * kwargs )

self . sync_mempools ( nodes , * * kwargs )

2017-05-07 15:13:29 +02:00

2019-08-09 01:14:11 +02:00

def disable_mocktime ( self ) :

self . mocktime = 0

2020-04-17 07:52:06 +02:00

for node in self . nodes :

node . mocktime = 0

2017-06-29 17:37:19 +02:00

2020-04-12 07:44:31 +02:00

def bump_mocktime ( self , t , update_nodes = True , nodes = None ) :

2019-08-09 01:14:11 +02:00

self . mocktime + = t

2020-04-08 22:14:39 +02:00

if update_nodes :

2020-04-14 12:00:16 +02:00

set_node_times ( nodes or self . nodes , self . mocktime )

2017-06-29 17:37:19 +02:00

2019-08-09 01:14:11 +02:00

def set_cache_mocktime ( self ) :

# For backwared compatibility of the python scripts

# with previous versions of the cache, set MOCKTIME

# to regtest genesis time + (201 * 156)

self . mocktime = GENESISTIME + ( 201 * 156 )

2020-04-17 07:52:06 +02:00

for node in self . nodes :

node . mocktime = self . mocktime

2017-06-29 17:37:19 +02:00

2019-08-09 01:14:11 +02:00

def set_genesis_mocktime ( self ) :

self . mocktime = GENESISTIME

2020-04-17 07:52:06 +02:00

for node in self . nodes :

node . mocktime = self . mocktime

2017-06-29 17:37:19 +02:00

2017-05-07 15:13:29 +02:00

# Private helper methods. These should not be accessed by the subclass test scripts.

2019-02-26 23:04:56 +01:00

def _start_logging ( self ) :

# Add logger and logging handlers

self . log = logging . getLogger ( ' TestFramework ' )

self . log . setLevel ( logging . DEBUG )

# Create file handler to log all messages

2018-06-12 14:52:26 +02:00

fh = logging . FileHandler ( self . options . tmpdir + ' /test_framework.log ' , encoding = ' utf-8 ' )

2019-02-26 23:04:56 +01:00

fh . setLevel ( logging . DEBUG )

# Create console handler to log messages to stderr. By default this logs only error messages, but can be configured with --loglevel.

ch = logging . StreamHandler ( sys . stdout )

# User can provide log level as a number or string (eg DEBUG). loglevel was caught as a string, so try to convert it to an int

ll = int ( self . options . loglevel ) if self . options . loglevel . isdigit ( ) else self . options . loglevel . upper ( )

ch . setLevel ( ll )

2019-08-09 01:21:57 +02:00

# Format logs the same as dashd's debug.log with microprecision (so log files can be concatenated and sorted)

2018-03-10 14:08:15 +01:00

formatter = logging . Formatter ( fmt = ' %(asctime)s . %(msecs)03d 000Z %(name)s ( %(levelname)s ): %(message)s ' , datefmt = ' % Y- % m- %d T % H: % M: % S ' )

2017-03-22 13:03:26 +01:00

formatter . converter = time . gmtime

2019-02-26 23:04:56 +01:00

fh . setFormatter ( formatter )

ch . setFormatter ( formatter )

# add the handlers to the logger

self . log . addHandler ( fh )

self . log . addHandler ( ch )

if self . options . trace_rpc :

rpc_logger = logging . getLogger ( " BitcoinRPC " )

rpc_logger . setLevel ( logging . DEBUG )

rpc_handler = logging . StreamHandler ( sys . stdout )

rpc_handler . setLevel ( logging . DEBUG )

rpc_logger . addHandler ( rpc_handler )

2021-06-17 19:05:11 +02:00

def _initialize_chain ( self , extra_args = None ) :

2017-05-07 15:13:29 +02:00

""" Initialize a pre-mined blockchain for use by the test.

Create a cache of a 200 - block - long chain ( with wallet ) for MAX_NODES

Afterward , create num_nodes copies from the cache . """

2017-09-01 18:47:13 +02:00

assert self . num_nodes < = MAX_NODES

2017-05-07 15:13:29 +02:00

create_cache = False

for i in range ( MAX_NODES ) :

2018-03-14 14:18:44 +01:00

if not os . path . isdir ( get_datadir_path ( self . options . cachedir , i ) ) :

2017-05-07 15:13:29 +02:00

create_cache = True

break

if create_cache :

self . log . debug ( " Creating data directories from cached datadir " )

# find and delete old cache directories if any exist

for i in range ( MAX_NODES ) :

2018-03-14 14:18:44 +01:00

if os . path . isdir ( get_datadir_path ( self . options . cachedir , i ) ) :

shutil . rmtree ( get_datadir_path ( self . options . cachedir , i ) )

2017-05-07 15:13:29 +02:00

# Create cache directories, run dashds:

2019-08-09 01:14:11 +02:00

self . set_genesis_mocktime ( )

2017-05-07 15:13:29 +02:00

for i in range ( MAX_NODES ) :

2021-01-22 15:58:07 +01:00

datadir = initialize_datadir ( self . options . cachedir , i , self . chain )

2018-09-10 22:58:15 +02:00

args = [ self . options . bitcoind , " -datadir= " + datadir , " -mocktime= " + str ( GENESISTIME ) , ' -disablewallet ' ]

2017-05-07 15:13:29 +02:00

if i > 0 :

args . append ( " -connect=127.0.0.1: " + str ( p2p_port ( 0 ) ) )

2019-06-20 18:36:17 +02:00

if extra_args is not None :

args . extend ( extra_args )

2018-12-29 20:18:43 +01:00

self . nodes . append ( TestNode ( i , get_datadir_path ( self . options . cachedir , i ) , chain = self . chain , extra_conf = [ " bind=127.0.0.1 " ] , extra_args = [ ] , extra_args_from_options = self . extra_args_from_options , rpchost = None ,

timewait = self . rpc_timeout ,

bitcoind = self . options . bitcoind ,

bitcoin_cli = self . options . bitcoincli ,

mocktime = self . mocktime ,

coverage_dir = None ,

) )

2017-08-15 23:34:07 +02:00

self . nodes [ i ] . args = args

2017-09-01 18:47:13 +02:00

self . start_node ( i )

2017-05-07 15:13:29 +02:00

2017-08-15 23:34:07 +02:00

# Wait for RPC connections to be ready

for node in self . nodes :

node . wait_for_rpc_connection ( )

2017-05-07 15:13:29 +02:00

# Create a 200-block-long chain; each of the 4 first nodes

# gets 25 mature blocks and 25 immature.

# Note: To preserve compatibility with older versions of

# initialize_chain, only 4 nodes will generate coins.

#

# blocks are created with timestamps 10 minutes apart

# starting from 2010 minutes in the past

2019-06-19 20:01:39 +02:00

block_time = GENESISTIME

2017-05-07 15:13:29 +02:00

for i in range ( 2 ) :

for peer in range ( 4 ) :

for j in range ( 25 ) :

set_node_times ( self . nodes , block_time )

2018-09-17 20:00:41 +02:00

self . nodes [ peer ] . generatetoaddress ( 1 , self . nodes [ peer ] . get_deterministic_priv_key ( ) . address )

2019-06-19 20:01:39 +02:00

block_time + = 156

2017-05-07 15:13:29 +02:00

# Must sync before next peer starts generating blocks

2020-04-14 12:00:16 +02:00

self . sync_blocks ( )

2017-05-07 15:13:29 +02:00

# Shut them down, and clean up cache directories:

self . stop_nodes ( )

self . nodes = [ ]

2017-06-29 17:37:19 +02:00

self . disable_mocktime ( )

2018-03-14 14:18:44 +01:00

def cache_path ( n , * paths ) :

2021-01-22 15:58:07 +01:00

chain = get_chain_folder ( get_datadir_path ( self . options . cachedir , n ) , self . chain )

return os . path . join ( get_datadir_path ( self . options . cachedir , n ) , chain , * paths )

2018-03-14 14:18:44 +01:00

2017-05-07 15:13:29 +02:00

for i in range ( MAX_NODES ) :

2018-09-10 22:58:15 +02:00

os . rmdir ( cache_path ( i , ' wallets ' ) ) # Remove empty wallets dir

2018-03-14 14:18:44 +01:00

for entry in os . listdir ( cache_path ( i ) ) :

2018-09-10 22:58:15 +02:00

if entry not in [ ' chainstate ' , ' blocks ' , ' indexes ' , ' evodb ' , ' llmq ' , ' backups ' ] :

2018-03-14 14:18:44 +01:00

os . remove ( cache_path ( i , entry ) )

2017-09-01 18:47:13 +02:00

for i in range ( self . num_nodes ) :

2018-03-14 14:18:44 +01:00

from_dir = get_datadir_path ( self . options . cachedir , i )

to_dir = get_datadir_path ( self . options . tmpdir , i )

2017-05-07 15:13:29 +02:00

shutil . copytree ( from_dir , to_dir )

2021-01-22 15:58:07 +01:00

initialize_datadir ( self . options . tmpdir , i , self . chain ) # Overwrite port/rpcport in dash.conf

2017-05-07 15:13:29 +02:00

2017-09-01 18:47:13 +02:00

def _initialize_chain_clean ( self ) :

2017-05-07 15:13:29 +02:00

""" Initialize empty blockchain for use by the test.

Create an empty blockchain and num_nodes wallets .

Useful if a test case wants complete control over initialization . """

2017-09-01 18:47:13 +02:00

for i in range ( self . num_nodes ) :

2021-01-22 15:58:07 +01:00

initialize_datadir ( self . options . tmpdir , i , self . chain )

2017-05-07 15:13:29 +02:00

2018-09-13 12:33:15 +02:00

def skip_if_no_py3_zmq ( self ) :

""" Attempt to import the zmq package and skip the test if the import fails. """

try :

import zmq # noqa

except ImportError :

raise SkipTest ( " python3-zmq module not available. " )

def skip_if_no_bitcoind_zmq ( self ) :

""" Skip the running test if dashd has not been compiled with zmq support. """

if not self . is_zmq_compiled ( ) :

raise SkipTest ( " dashd has not been built with zmq enabled. " )

def skip_if_no_wallet ( self ) :

""" Skip the running test if wallet has not been compiled. """

if not self . is_wallet_compiled ( ) :

raise SkipTest ( " wallet has not been compiled. " )

def skip_if_no_cli ( self ) :

""" Skip the running test if dash-cli has not been compiled. """

if not self . is_cli_compiled ( ) :

raise SkipTest ( " dash-cli has not been compiled. " )

def is_cli_compiled ( self ) :

""" Checks whether dash-cli was compiled. """

config = configparser . ConfigParser ( )

config . read_file ( open ( self . options . configfile ) )

return config [ " components " ] . getboolean ( " ENABLE_UTILS " )

def is_wallet_compiled ( self ) :

""" Checks whether the wallet module was compiled. """

config = configparser . ConfigParser ( )

config . read_file ( open ( self . options . configfile ) )

return config [ " components " ] . getboolean ( " ENABLE_WALLET " )

def is_zmq_compiled ( self ) :

""" Checks whether the zmq module was compiled. """

config = configparser . ConfigParser ( )

config . read_file ( open ( self . options . configfile ) )

return config [ " components " ] . getboolean ( " ENABLE_ZMQ " )

2018-10-20 16:08:02 +02:00

MASTERNODE_COLLATERAL = 1000

class MasternodeInfo :

2018-12-31 08:12:36 +01:00

def __init__ ( self , proTxHash , ownerAddr , votingAddr , pubKeyOperator , keyOperator , collateral_address , collateral_txid , collateral_vout ) :

self . proTxHash = proTxHash

self . ownerAddr = ownerAddr

self . votingAddr = votingAddr

self . pubKeyOperator = pubKeyOperator

self . keyOperator = keyOperator

self . collateral_address = collateral_address

self . collateral_txid = collateral_txid

self . collateral_vout = collateral_vout

2018-10-20 16:08:02 +02:00

class DashTestFramework ( BitcoinTestFramework ) :

2021-04-08 22:52:05 +02:00

def set_test_params ( self ) :

""" Tests must this method to change default values for number of nodes, topology, etc """

raise NotImplementedError

2018-09-13 12:33:15 +02:00

def skip_test_if_missing_module ( self ) :

self . skip_if_no_wallet ( )

2021-04-08 22:52:05 +02:00

def run_test ( self ) :

""" Tests must override this method to define test logic """

raise NotImplementedError

2019-12-05 19:28:33 +01:00

def set_dash_test_params ( self , num_nodes , masterodes_count , extra_args = None , fast_dip3_enforcement = False ) :

2018-10-20 16:08:02 +02:00

self . mn_count = masterodes_count

self . num_nodes = num_nodes

self . mninfo = [ ]

self . setup_clean_chain = True

# additional args

2019-12-05 19:28:33 +01:00

if extra_args is None :

extra_args = [ [ ] ] * num_nodes

assert_equal ( len ( extra_args ) , num_nodes )

2020-01-04 12:22:41 +01:00

self . extra_args = [ copy . deepcopy ( a ) for a in extra_args ]

2019-12-05 19:28:33 +01:00

self . extra_args [ 0 ] + = [ " -sporkkey=cP4EKFyJsHT39LDqgdcB43Y3YXjNyjb5Fuas1GQSeAtjnZWmZEQK " ]

2019-01-29 15:54:38 +01:00

self . fast_dip3_enforcement = fast_dip3_enforcement

if fast_dip3_enforcement :

2019-12-05 19:28:33 +01:00

for i in range ( 0 , num_nodes ) :

2020-01-04 12:22:41 +01:00

self . extra_args [ i ] . append ( " -dip3params=30:50 " )

2019-01-23 17:36:51 +01:00

2020-12-12 07:57:39 +01:00

# make sure to activate dip8 after prepare_masternodes has finished its job already

self . set_dash_dip8_activation ( 200 )

2020-01-07 13:49:51 +01:00

# LLMQ default test params (no need to pass -llmqtestparams)

self . llmq_size = 3

self . llmq_threshold = 2

2021-02-01 17:10:19 +01:00

# This is nRequestTimeout in dash-q-recovery thread

self . quorum_data_thread_request_timeout_seconds = 10

2021-01-28 23:33:18 +01:00

# This is EXPIRATION_TIMEOUT in CQuorumDataRequest

self . quorum_data_request_expiration_timeout = 300

2020-01-04 12:21:16 +01:00

def set_dash_dip8_activation ( self , activate_after_block ) :

2020-12-28 12:21:01 +01:00

self . dip8_activation_height = activate_after_block

2020-01-04 12:21:16 +01:00

for i in range ( 0 , self . num_nodes ) :

2020-12-28 12:21:01 +01:00

self . extra_args [ i ] . append ( " -dip8params= %d " % ( activate_after_block ) )

2021-01-08 20:56:32 +01:00

def activate_dip8 ( self , slow_mode = False ) :

# NOTE: set slow_mode=True if you are activating dip8 after a huge reorg

# or nodes might fail to catch up otherwise due to a large

# (MAX_BLOCKS_IN_TRANSIT_PER_PEER = 16 blocks) reorg error.

2020-12-28 12:21:01 +01:00

self . log . info ( " Wait for dip0008 activation " )

while self . nodes [ 0 ] . getblockcount ( ) < self . dip8_activation_height :

self . nodes [ 0 ] . generate ( 10 )

2021-01-08 20:56:32 +01:00

if slow_mode :

self . sync_blocks ( )

self . sync_blocks ( )

2020-01-04 12:21:16 +01:00

2020-01-07 13:49:51 +01:00

def set_dash_llmq_test_params ( self , llmq_size , llmq_threshold ) :

self . llmq_size = llmq_size

self . llmq_threshold = llmq_threshold

for i in range ( 0 , self . num_nodes ) :

self . extra_args [ i ] . append ( " -llmqtestparams= %d : %d " % ( self . llmq_size , self . llmq_threshold ) )

2018-10-20 16:08:02 +02:00

def create_simple_node ( self ) :

idx = len ( self . nodes )

2019-12-05 19:28:33 +01:00

self . add_nodes ( 1 , extra_args = [ self . extra_args [ idx ] ] )

2019-09-24 00:57:30 +02:00

self . start_node ( idx )

2018-10-20 16:08:02 +02:00

for i in range ( 0 , idx ) :

connect_nodes ( self . nodes [ i ] , idx )

def prepare_masternodes ( self ) :

2019-10-03 12:45:18 +02:00

self . log . info ( " Preparing %d masternodes " % self . mn_count )

2018-10-20 16:08:02 +02:00

for idx in range ( 0 , self . mn_count ) :

2019-04-04 08:08:44 +02:00

self . prepare_masternode ( idx )

def prepare_masternode ( self , idx ) :

bls = self . nodes [ 0 ] . bls ( ' generate ' )

address = self . nodes [ 0 ] . getnewaddress ( )

txid = self . nodes [ 0 ] . sendtoaddress ( address , MASTERNODE_COLLATERAL )

txraw = self . nodes [ 0 ] . getrawtransaction ( txid , True )

collateral_vout = 0

for vout_idx in range ( 0 , len ( txraw [ " vout " ] ) ) :

vout = txraw [ " vout " ] [ vout_idx ]

if vout [ " value " ] == MASTERNODE_COLLATERAL :

collateral_vout = vout_idx

self . nodes [ 0 ] . lockunspent ( False , [ { ' txid ' : txid , ' vout ' : collateral_vout } ] )

# send to same address to reserve some funds for fees

self . nodes [ 0 ] . sendtoaddress ( address , 0.001 )

ownerAddr = self . nodes [ 0 ] . getnewaddress ( )

votingAddr = self . nodes [ 0 ] . getnewaddress ( )

rewardsAddr = self . nodes [ 0 ] . getnewaddress ( )

port = p2p_port ( len ( self . nodes ) + idx )

2020-12-24 00:24:06 +01:00

ipAndPort = ' 127.0.0.1: %d ' % port

operatorReward = idx

operatorPayoutAddress = self . nodes [ 0 ] . getnewaddress ( )

2020-11-28 20:14:29 +01:00

submit = ( idx % 4 ) < 2

if ( idx % 2 ) == 0 :

2019-04-04 08:08:44 +02:00

self . nodes [ 0 ] . lockunspent ( True , [ { ' txid ' : txid , ' vout ' : collateral_vout } ] )

2020-12-24 00:24:06 +01:00

protx_result = self . nodes [ 0 ] . protx ( ' register_fund ' , address , ipAndPort , ownerAddr , bls [ ' public ' ] , votingAddr , operatorReward , rewardsAddr , address , submit )

2019-04-04 08:08:44 +02:00

else :

2018-12-31 08:12:36 +01:00

self . nodes [ 0 ] . generate ( 1 )

2020-12-24 00:24:06 +01:00

protx_result = self . nodes [ 0 ] . protx ( ' register ' , txid , collateral_vout , ipAndPort , ownerAddr , bls [ ' public ' ] , votingAddr , operatorReward , rewardsAddr , address , submit )

2020-11-28 20:14:29 +01:00

if submit :

proTxHash = protx_result

else :

proTxHash = self . nodes [ 0 ] . sendrawtransaction ( protx_result )

2019-04-04 08:08:44 +02:00

self . nodes [ 0 ] . generate ( 1 )

2020-12-24 00:24:06 +01:00

if operatorReward > 0 :

self . nodes [ 0 ] . protx ( ' update_service ' , proTxHash , ipAndPort , bls [ ' secret ' ] , operatorPayoutAddress , address )

2019-04-04 08:08:44 +02:00

self . mninfo . append ( MasternodeInfo ( proTxHash , ownerAddr , votingAddr , bls [ ' public ' ] , bls [ ' secret ' ] , address , txid , collateral_vout ) )

self . sync_all ( )

2018-10-20 16:08:02 +02:00

2019-10-03 12:45:18 +02:00

self . log . info ( " Prepared masternode %d : collateral_txid= %s , collateral_vout= %d , protxHash= %s " % ( idx , txid , collateral_vout , proTxHash ) )

2021-01-14 21:00:57 +01:00

def remove_masternode ( self , idx ) :

2019-04-04 08:08:44 +02:00

mn = self . mninfo [ idx ]

rawtx = self . nodes [ 0 ] . createrawtransaction ( [ { " txid " : mn . collateral_txid , " vout " : mn . collateral_vout } ] , { self . nodes [ 0 ] . getnewaddress ( ) : 999.9999 } )

2020-12-11 03:25:55 +01:00

rawtx = self . nodes [ 0 ] . signrawtransactionwithwallet ( rawtx )

2019-04-04 08:08:44 +02:00

self . nodes [ 0 ] . sendrawtransaction ( rawtx [ " hex " ] )

self . nodes [ 0 ] . generate ( 1 )

2018-12-31 08:12:36 +01:00

self . sync_all ( )

2019-04-04 08:08:44 +02:00

self . mninfo . remove ( mn )

2018-10-20 16:08:02 +02:00

2019-10-03 12:45:18 +02:00

self . log . info ( " Removed masternode %d " , idx )

2019-01-23 17:36:51 +01:00

def prepare_datadirs ( self ) :

# stop faucet node so that we can copy the datadir

2019-07-04 16:48:01 +02:00

self . stop_node ( 0 )

2019-01-23 17:36:51 +01:00

start_idx = len ( self . nodes )

for idx in range ( 0 , self . mn_count ) :

2021-01-22 15:58:07 +01:00

copy_datadir ( 0 , idx + start_idx , self . options . tmpdir , self . chain )

2019-01-23 17:36:51 +01:00

# restart faucet node

2019-09-24 00:57:30 +02:00

self . start_node ( 0 )

2020-12-06 00:39:38 +01:00

force_finish_mnsync ( self . nodes [ 0 ] )

2019-01-23 17:36:51 +01:00

2018-12-31 08:12:36 +01:00

def start_masternodes ( self ) :

2019-10-03 12:45:18 +02:00

self . log . info ( " Starting %d masternodes " , self . mn_count )

2018-12-31 08:12:36 +01:00

start_idx = len ( self . nodes )

2019-01-23 17:36:51 +01:00

2019-09-24 00:57:30 +02:00

self . add_nodes ( self . mn_count )

2019-01-23 17:36:51 +01:00

executor = ThreadPoolExecutor ( max_workers = 20 )

def do_connect ( idx ) :

2019-12-05 19:31:55 +01:00

# Connect to the control node only, masternodes should take care of intra-quorum connections themselves

connect_nodes ( self . mninfo [ idx ] . node , 0 )

2019-01-23 17:36:51 +01:00

jobs = [ ]

# start up nodes in parallel

for idx in range ( 0 , self . mn_count ) :

2020-03-30 11:28:05 +02:00

self . mninfo [ idx ] . nodeIdx = idx + start_idx

jobs . append ( executor . submit ( self . start_masternode , self . mninfo [ idx ] ) )

2019-01-23 17:36:51 +01:00

# wait for all nodes to start up

for job in jobs :

job . result ( )

jobs . clear ( )

# connect nodes in parallel

for idx in range ( 0 , self . mn_count ) :

jobs . append ( executor . submit ( do_connect , idx ) )

# wait for all nodes to connect

for job in jobs :

job . result ( )

jobs . clear ( )

executor . shutdown ( )

2020-03-30 11:28:05 +02:00

def start_masternode ( self , mninfo , extra_args = None ) :

args = [ ' -masternodeblsprivkey= %s ' % mninfo . keyOperator ] + self . extra_args [ mninfo . nodeIdx ]

if extra_args is not None :

args + = extra_args

self . start_node ( mninfo . nodeIdx , extra_args = args )

mninfo . node = self . nodes [ mninfo . nodeIdx ]

force_finish_mnsync ( mninfo . node )

2018-10-20 16:08:02 +02:00

def setup_network ( self ) :

2019-10-03 12:45:18 +02:00

self . log . info ( " Creating and starting controller node " )

2019-12-05 19:28:33 +01:00

self . add_nodes ( 1 , extra_args = [ self . extra_args [ 0 ] ] )

2019-09-24 00:57:30 +02:00

self . start_node ( 0 )

2018-10-20 16:08:02 +02:00

required_balance = MASTERNODE_COLLATERAL * self . mn_count + 1

2019-10-03 12:45:18 +02:00

self . log . info ( " Generating %d coins " % required_balance )

2018-10-20 16:08:02 +02:00

while self . nodes [ 0 ] . getbalance ( ) < required_balance :

2019-08-09 01:14:11 +02:00

self . bump_mocktime ( 1 )

2020-01-04 12:21:16 +01:00

self . nodes [ 0 ] . generate ( 10 )

2019-10-03 12:45:18 +02:00

num_simple_nodes = self . num_nodes - self . mn_count - 1

self . log . info ( " Creating and starting %s simple nodes " , num_simple_nodes )

for i in range ( 0 , num_simple_nodes ) :

2018-10-20 16:08:02 +02:00

self . create_simple_node ( )

2018-12-31 08:12:36 +01:00

2019-10-03 12:45:18 +02:00

self . log . info ( " Activating DIP3 " )

2019-01-29 15:54:38 +01:00

if not self . fast_dip3_enforcement :

2019-01-23 17:36:51 +01:00

while self . nodes [ 0 ] . getblockcount ( ) < 500 :

self . nodes [ 0 ] . generate ( 10 )

2018-12-31 08:12:36 +01:00

self . sync_all ( )

# create masternodes

self . prepare_masternodes ( )

2019-01-23 17:36:51 +01:00

self . prepare_datadirs ( )

2018-12-31 08:12:36 +01:00

self . start_masternodes ( )

2019-12-05 19:31:55 +01:00

# non-masternodes where disconnected from the control node during prepare_datadirs,

# let's reconnect them back to make sure they receive updates

for i in range ( 0 , num_simple_nodes ) :

connect_nodes ( self . nodes [ i + 1 ] , 0 )

2019-08-09 01:14:11 +02:00

self . bump_mocktime ( 1 )

2018-10-20 16:08:02 +02:00

self . nodes [ 0 ] . generate ( 1 )

# sync nodes

self . sync_all ( )

2020-12-12 07:57:39 +01:00

# Enable InstantSend (including block filtering) and ChainLocks by default

self . nodes [ 0 ] . spork ( " SPORK_2_INSTANTSEND_ENABLED " , 0 )

self . nodes [ 0 ] . spork ( " SPORK_3_INSTANTSEND_BLOCK_FILTERING " , 0 )

self . nodes [ 0 ] . spork ( " SPORK_19_CHAINLOCKS_ENABLED " , 0 )

self . wait_for_sporks_same ( )

2019-08-09 01:14:11 +02:00

self . bump_mocktime ( 1 )

2018-12-31 08:12:36 +01:00

2018-10-20 16:08:02 +02:00

mn_info = self . nodes [ 0 ] . masternodelist ( " status " )

2021-08-27 21:03:02 +02:00

assert len ( mn_info ) == self . mn_count

2018-10-20 16:08:02 +02:00

for status in mn_info . values ( ) :

2021-08-27 21:03:02 +02:00

assert status == ' ENABLED '

2018-10-20 16:08:02 +02:00

2019-03-18 14:50:44 +01:00

def create_raw_tx ( self , node_from , node_to , amount , min_inputs , max_inputs ) :

2021-08-27 21:03:02 +02:00

assert min_inputs < = max_inputs

2018-10-20 16:08:02 +02:00

# fill inputs

inputs = [ ]

balances = node_from . listunspent ( )

in_amount = 0.0

last_amount = 0.0

for tx in balances :

if len ( inputs ) < min_inputs :

input = { }

input [ " txid " ] = tx [ ' txid ' ]

input [ ' vout ' ] = tx [ ' vout ' ]

in_amount + = float ( tx [ ' amount ' ] )

inputs . append ( input )

elif in_amount > amount :

break

elif len ( inputs ) < max_inputs :

input = { }

input [ " txid " ] = tx [ ' txid ' ]

input [ ' vout ' ] = tx [ ' vout ' ]

in_amount + = float ( tx [ ' amount ' ] )

inputs . append ( input )

else :

input = { }

input [ " txid " ] = tx [ ' txid ' ]

input [ ' vout ' ] = tx [ ' vout ' ]

in_amount - = last_amount

in_amount + = float ( tx [ ' amount ' ] )

inputs [ - 1 ] = input

last_amount = float ( tx [ ' amount ' ] )

2021-08-27 21:03:02 +02:00

assert len ( inputs ) > = min_inputs

assert len ( inputs ) < = max_inputs

assert in_amount > = amount

2018-10-20 16:08:02 +02:00

# fill outputs

receiver_address = node_to . getnewaddress ( )

change_address = node_from . getnewaddress ( )

fee = 0.001

outputs = { }

outputs [ receiver_address ] = satoshi_round ( amount )

outputs [ change_address ] = satoshi_round ( in_amount - amount - fee )

rawtx = node_from . createrawtransaction ( inputs , outputs )

2020-12-11 03:25:55 +01:00

ret = node_from . signrawtransactionwithwallet ( rawtx )

2019-05-07 14:14:33 +02:00

decoded = node_from . decoderawtransaction ( ret [ ' hex ' ] )

ret = { * * decoded , * * ret }

return ret

2018-10-20 16:08:02 +02:00

2019-12-06 10:05:58 +01:00

def wait_for_tx ( self , txid , node , expected = True , timeout = 15 ) :

def check_tx ( ) :

try :

return node . getrawtransaction ( txid )

except :

return False

if wait_until ( check_tx , timeout = timeout , sleep = 0.5 , do_assert = expected ) and not expected :

raise AssertionError ( " waiting unexpectedly succeeded " )

2020-12-09 20:52:11 +01:00

def create_islock ( self , hextx ) :

tx = FromHex ( CTransaction ( ) , hextx )

tx . rehash ( )

request_id_buf = ser_string ( b " islock " ) + ser_compact_size ( len ( tx . vin ) )

inputs = [ ]

for txin in tx . vin :

request_id_buf + = txin . prevout . serialize ( )

inputs . append ( txin . prevout )

request_id = hash256 ( request_id_buf ) [ : : - 1 ] . hex ( )

message_hash = tx . hash

2021-03-15 03:49:38 +01:00

quorum_member = None

2020-12-09 20:52:11 +01:00

for mn in self . mninfo :

2021-03-15 03:49:38 +01:00

res = mn . node . quorum ( ' sign ' , 100 , request_id , message_hash )

if ( res and quorum_member is None ) :

quorum_member = mn

2020-12-09 20:52:11 +01:00

2021-03-15 03:49:38 +01:00

rec_sig = self . get_recovered_sig ( request_id , message_hash , node = quorum_member . node )

2020-12-09 20:52:11 +01:00

islock = msg_islock ( inputs , tx . sha256 , hex_str_to_bytes ( rec_sig [ ' sig ' ] ) )

return islock

2019-12-06 10:05:58 +01:00

def wait_for_instantlock ( self , txid , node , expected = True , timeout = 15 ) :

2019-10-01 16:14:26 +02:00

def check_instantlock ( ) :

2019-03-19 08:38:16 +01:00

try :

2019-10-01 16:14:26 +02:00

return node . getrawtransaction ( txid , True ) [ " instantlock " ]

2019-03-19 08:38:16 +01:00

except :

2019-10-01 16:14:26 +02:00

return False

2019-12-06 10:05:58 +01:00

if wait_until ( check_instantlock , timeout = timeout , sleep = 0.5 , do_assert = expected ) and not expected :

raise AssertionError ( " waiting unexpectedly succeeded " )

2018-10-20 16:08:02 +02:00

2019-12-06 10:05:58 +01:00

def wait_for_chainlocked_block ( self , node , block_hash , expected = True , timeout = 15 ) :

2019-10-02 02:11:10 +02:00

def check_chainlocked_block ( ) :

try :

block = node . getblock ( block_hash )

return block [ " confirmations " ] > 0 and block [ " chainlock " ]

except :

2019-01-18 11:51:31 +01:00

return False

2019-12-06 10:05:58 +01:00

if wait_until ( check_chainlocked_block , timeout = timeout , sleep = 0.1 , do_assert = expected ) and not expected :

raise AssertionError ( " waiting unexpectedly succeeded " )

2019-10-02 02:11:10 +02:00

2019-10-02 15:24:57 +02:00

def wait_for_chainlocked_block_all_nodes ( self , block_hash , timeout = 15 ) :

2019-10-02 02:11:10 +02:00

for node in self . nodes :

2019-12-06 10:05:58 +01:00

self . wait_for_chainlocked_block ( node , block_hash , timeout = timeout )

2019-10-02 02:11:10 +02:00

2019-10-02 15:24:57 +02:00

def wait_for_best_chainlock ( self , node , block_hash , timeout = 15 ) :

wait_until ( lambda : node . getbestchainlock ( ) [ " blockhash " ] == block_hash , timeout = timeout , sleep = 0.1 )

2019-10-02 02:11:10 +02:00

def wait_for_sporks_same ( self , timeout = 30 ) :

def check_sporks_same ( ) :

sporks = self . nodes [ 0 ] . spork ( ' show ' )

return all ( node . spork ( ' show ' ) == sporks for node in self . nodes [ 1 : ] )

wait_until ( check_sporks_same , timeout = timeout , sleep = 0.5 )

2019-01-18 11:51:31 +01:00

2020-03-30 10:37:49 +02:00

def wait_for_quorum_connections ( self , expected_connections , nodes , timeout = 60 , wait_proc = None ) :

2020-01-08 08:01:26 +01:00

def check_quorum_connections ( ) :

all_ok = True

2020-03-30 10:37:49 +02:00

for node in nodes :

2020-01-08 08:01:26 +01:00

s = node . quorum ( " dkgstatus " )

2021-01-16 03:59:42 +01:00

if ' llmq_test ' not in s [ " session " ] :

2020-01-08 08:01:26 +01:00

continue

if " quorumConnections " not in s :

all_ok = False

break

s = s [ " quorumConnections " ]

if " llmq_test " not in s :

all_ok = False

break

cnt = 0

for c in s [ " llmq_test " ] :

if c [ " connected " ] :

cnt + = 1

if cnt < expected_connections :

all_ok = False

break

2020-03-27 22:59:02 +01:00

if not all_ok and wait_proc is not None :

wait_proc ( )

2020-01-08 08:01:26 +01:00

return all_ok

2020-03-31 07:06:41 +02:00

wait_until ( check_quorum_connections , timeout = timeout , sleep = 1 )

2020-01-08 08:01:26 +01:00

2020-04-15 16:18:05 +02:00

def wait_for_masternode_probes ( self , mninfos , timeout = 30 , wait_proc = None ) :

2020-03-30 14:51:26 +02:00

def check_probes ( ) :

2020-04-15 16:18:05 +02:00

def ret ( ) :

if wait_proc is not None :

wait_proc ( )

return False

2020-03-30 14:51:26 +02:00

for mn in mninfos :

2020-04-15 16:18:05 +02:00

s = mn . node . quorum ( ' dkgstatus ' )

2021-01-16 03:59:42 +01:00

if ' llmq_test ' not in s [ " session " ] :

2020-04-15 16:18:05 +02:00

continue

if " quorumConnections " not in s :

return ret ( )

s = s [ " quorumConnections " ]

if " llmq_test " not in s :

return ret ( )

for c in s [ " llmq_test " ] :

if c [ " proTxHash " ] == mn . proTxHash :

continue

if not c [ " outbound " ] :

mn2 = mn . node . protx ( ' info ' , c [ " proTxHash " ] )

if [ m for m in mninfos if c [ " proTxHash " ] == m . proTxHash ] :

# MN is expected to be online and functioning, so let's verify that the last successful

# probe is not too old. Probes are retried after 50 minutes, while DKGs consider a probe

# as failed after 60 minutes

if mn2 [ ' metaInfo ' ] [ ' lastOutboundSuccessElapsed ' ] > 55 * 60 :

return ret ( )

else :

# MN is expected to be offline, so let's only check that the last probe is not too long ago

if mn2 [ ' metaInfo ' ] [ ' lastOutboundAttemptElapsed ' ] > 55 * 60 and mn2 [ ' metaInfo ' ] [ ' lastOutboundSuccessElapsed ' ] > 55 * 60 :

return ret ( )

2020-03-30 14:51:26 +02:00

return True

wait_until ( check_probes , timeout = timeout , sleep = 1 )

2020-03-30 10:37:49 +02:00

def wait_for_quorum_phase ( self , quorum_hash , phase , expected_member_count , check_received_messages , check_received_messages_count , mninfos , timeout = 30 , sleep = 0.1 ) :

2019-10-02 02:11:10 +02:00

def check_dkg_session ( ) :

2019-01-18 11:51:31 +01:00

all_ok = True

2020-01-07 13:49:51 +01:00

member_count = 0

2020-03-30 10:37:49 +02:00

for mn in mninfos :

2019-02-01 08:49:01 +01:00

s = mn . node . quorum ( " dkgstatus " ) [ " session " ]

2020-01-07 13:49:51 +01:00

if " llmq_test " not in s :

continue

member_count + = 1

s = s [ " llmq_test " ]

2020-03-26 13:25:24 +01:00